How Not to Use AI? (At Work)

A framework to avoid net negative contribution when using AI at work.

TL;DR

At work, don’t treat your AI tool as a peer; “act” like its manager. And when building meaningful AI products, first get a clear idea of how a human (or a team of humans) would do the job.

This article was triggered by a pattern I’ve started noticing: AI is often used inefficiently. I see it in two places: first, in personal use at work (mainly from a white-collar worker’s perspective), and second, in building AI products (covered in a follow-up article, Part II).

Note that this is based on my personal, anecdotal observations of people at work (and outside of it), primarily for “text” generation using AI tools.

Part I: When using AI at work

Over the past two years or so, we have observed a gradual increase in the use of AI at work.

We have gone from a handful of people posting about some model or tool whose name you can’t even recall now in a rarely visited Slack channel, to almost everyone having a ChatGPT tab open.

As a result, another shift is underway.

So-called “AI slop” has entered the workplace and is spreading; it is no longer limited to social media photos and videos.

AI Slop at the Workplace

Multiple definitions of “AI Slop” are emerging, and while none has settled into a stable form yet, the term is gaining wider recognition.

In June 2025, the Cambridge Dictionary added an AI-related definition of “slop”:

Content on the internet that is of very low quality, especially when it is created by artificial intelligence.

Another school of thought defines it as “AI content that is generated and shared without any meaningful human judgment,” and that is exactly the pattern that prompted this article.

Lately, I have noticed a few patterns:

- The average length of documents and emails has increased.

- Not alarmingly so, but people have started writing Slack/Teams responses with LLMs (and not just to fix the grammar).

- There are numerous meeting note-takers, but only about 1 in 10 recorded meetings ever gets watched again.

- Many discussions and brainstorms now start with a meeting summary or transcript, which often goes through multiple rounds of information loss as it’s summarized and re-expanded by different stakeholders [arXiv:2509.04438, arXiv:2401.16475].

- It’s hard to fully trust meeting summaries, transcripts, or notes when you need takeaways for a high-stakes or critical action item. (In such cases, I still end up watching the actual meeting, even if it’s at 1.75x speed.)

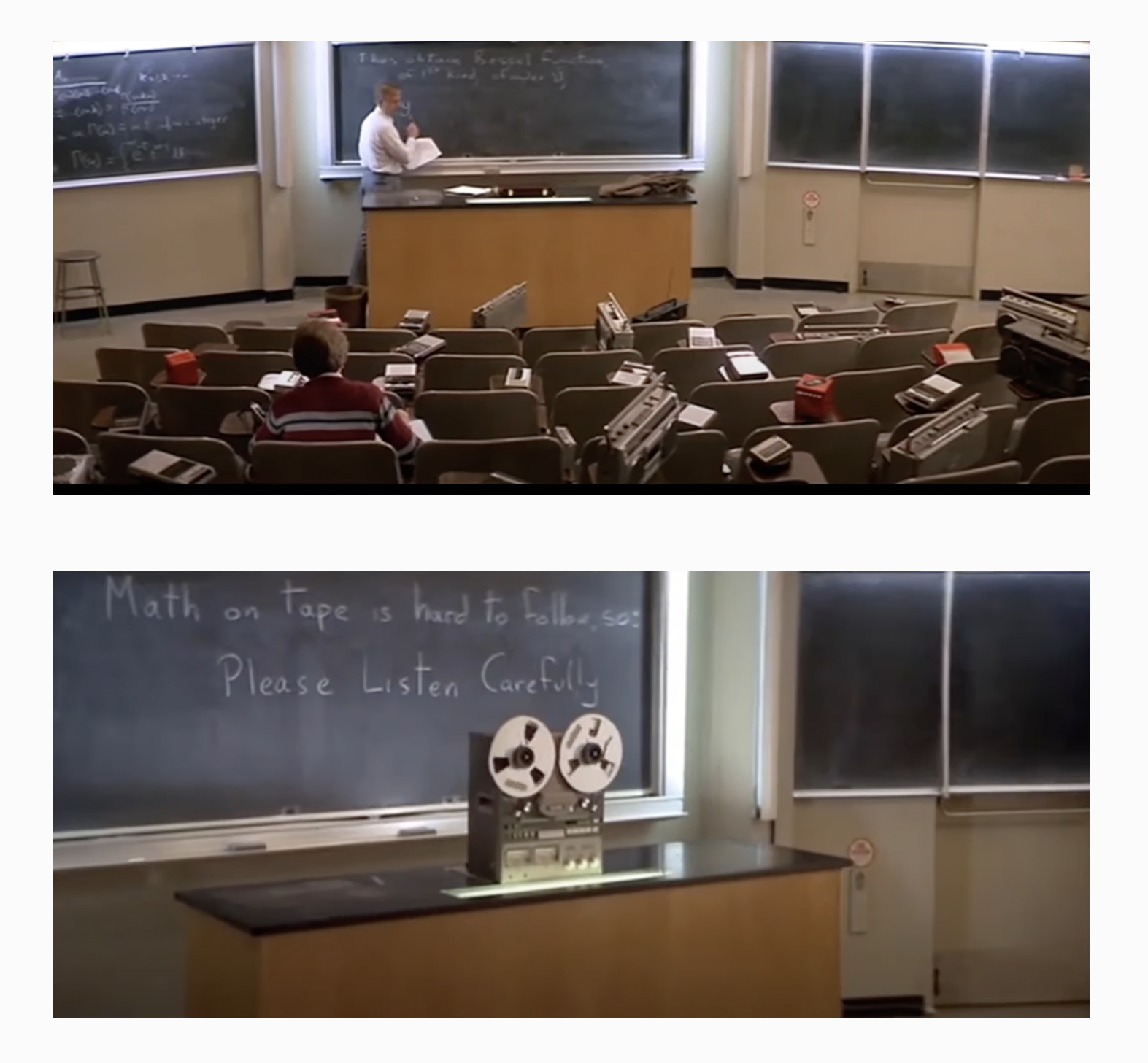

People have been quick to point out that the excessive use of meeting summarisation resembles a scene from the 1985 movie Real Genius, where the lecturer ends up playing a tape-recorded lesson to the class, because the students had stopped attending and were just recording his lessons.

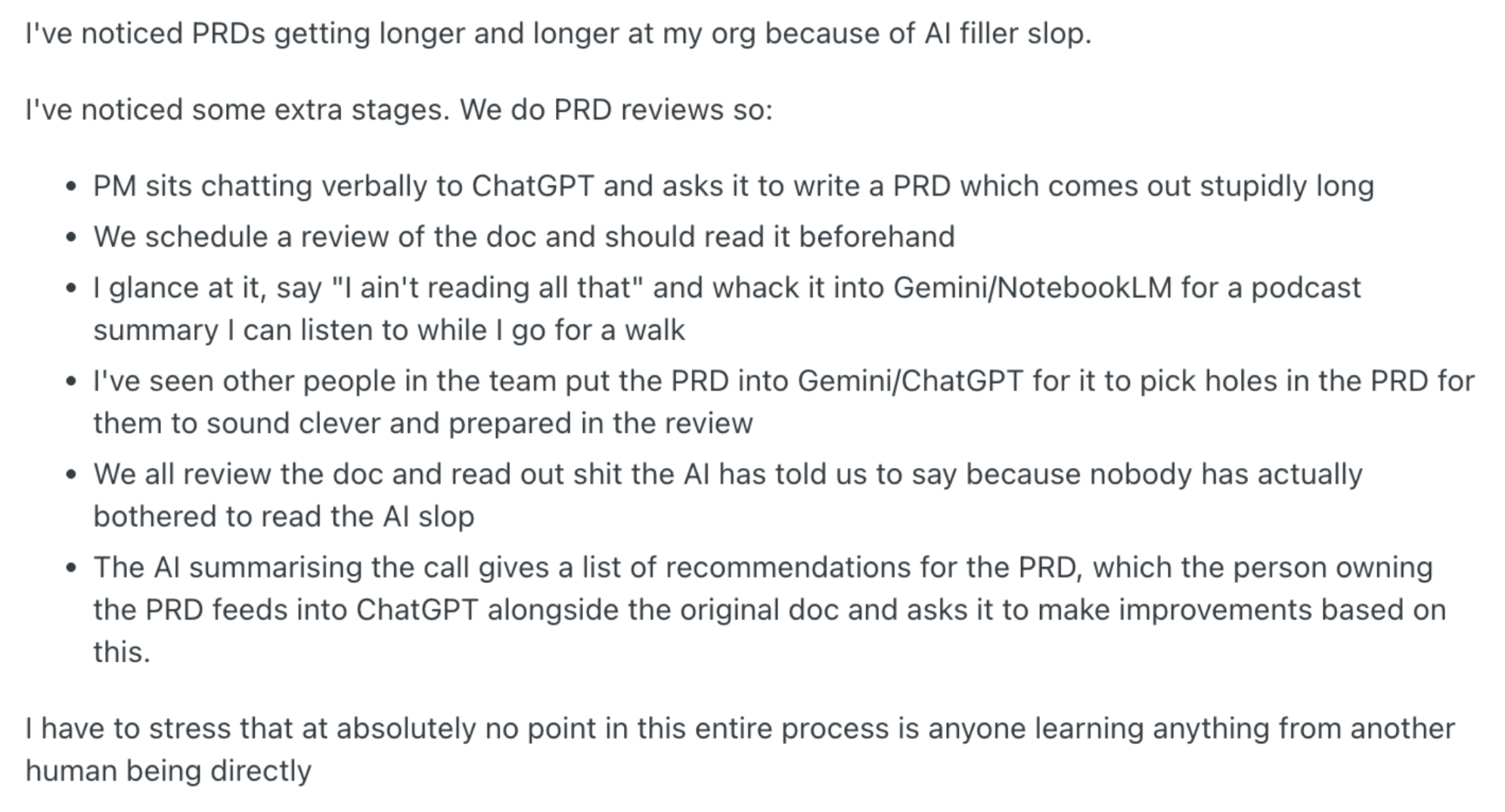

Here is a relevant comment on a similar thread regarding “AI Slop in Product Management” from u/demeschor.

Initial Reaction to Slop

Over the last few years, I have more or less trained a subconscious classifier, with good precision and recall, that flags whenever something at work has been written by an LLM. (I can’t make the same tall claim for content outside the workplace or on the internet in general.)

Why do I claim good precision and recall?

I wouldn’t say the same for other AI-generated media, but for text, two signals mainly give me the required confidence:

- People often pass content along straight from the LLM without editing it, most of the time with the original formatting intact. We have all noticed that the supply of em dashes is at an all-time high, to the extent that genuine writers are shying away from using them.

- Having spent significant time with the same (or similar) individuals, I find it easy to notice how their writing changed after they adopted LLM tools.

The moment I come across an artifact flagged by this filter, my first reaction is to think about how I want to consume it.

For critical, one-way-door decisions, such as an email response to an important customer or a scope/strategy document where the cost of missing an edge case is high, you risk contributing to “AI Slop” if you stop at an AI-summarised version of the artifact.

In those scenarios, you need to spot the gaps yourself and go beyond just reviewing the summary.

Suggestion: Be a manager to your “AI”

If you relied only on an LLM to prepare for a meeting, and it went badly because you didn’t apply basic common sense, you’re responsible for the lost deal.

The same goes for the database that gets wiped out in production because of your vibe-coding AI tool.


With AI tools, the default relationship is to treat them as a co-worker, and many user experiences are designed to sustain that peer-level relationship.

In such a setup, information exchange is made extremely easy. For example, AI tools have started to live closer to where work-related data lives (e.g., Slack AI, app connectors).

But there is little focus on reviewing the work itself:

- Be it you (user) reviewing the AI output, or

- The AI tool reviewing the quality of your prompt and suggesting a better version (or asking deeper questions, as Deep Research does to some extent).

I expect the latter to improve in both enterprise and consumer applications.

In short, you’re the one responsible, and when it comes to guarding against sloppy work, you’re pretty much on your own.

A good starting point is to outline responsibilities. Having a smart team member (the AI tool) by your side does not, on its own, guarantee success; we’ve all seen how that plays out.

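Naturally, you’re supposed to act like a manager here: as Andy Grove argues in his book High Output Management, a manager’s output is the output of the organization under their supervision, and in this case that includes the AI tools you direct.

Let’s look at two other key concepts from the same book.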

Concept-1: Task-Relevant Maturity

As a manager, you give more guidance when someone is new or inexperienced at a task, and step back as they get better at it.

In the context of this article, a few examples include:

- Choosing the right model for the right task, and

- Knowing when you have to go deeper vs. when it’s safe to rely on surface-level work done by the tools.

Concept-2: Managerial Leverage

As a manager, you focus on the few actions that create the biggest impact and avoid spending time on low-value work.

In the context of this article, your two most important high-leverage activities are:

- Giving the AI tool the right context regarding the problem statement, and

- Knowing when the output meets the required standard.

Conclusion

Overall, we need to recognize and avoid producing “AI Slop” at work, because it makes a net negative contribution.

In the current environment, it’s also important to develop good taste [1, 2, 3]. Without it, people produce subpar output even when they have a smart AI tool at hand; they simply don’t realize when they’re crossing into sloppy territory.

As Simon Willison puts it, sharing unreviewed content that has been artificially generated with other people is rude.